When building data pipelines, you quickly realize how dependent they are on parameters, from source paths, to thresholds, to column mappings, and more. It usually starts off simple: you’re prototyping in a notebook, trying to avoid hardcoding too much directly in your logic by adding a few basic parameters like environment, table names, connection details, … Later come some filter conditions, maybe a bit of conditional logic, or even strategy patterns if you’re into software engineering.

Before long, your pipeline is up and running locally or in dev, and your parameters are scattered all over the notebook. Some settings are still hardcoded, others are duplicated or inconsistently applied, and now you’re scrolling through the notebook to find a parameter you want to adjust for testing.

Sounds familiar?

The problem with hard-coding parameters and how to solve them

At first, it might seem harmless to define these parameters directly in your notebook or main file, as long as it’s not sensitive data, of course. But as your pipeline grows in complexity or you begin to support multiple environments (like development, staging, and production), hard-coding parameters is not the quick win it was at first:

- As the complexity of pipelines grows, the number of parameters usually grows with it. Rather than having a long list of parameters (if they’re not scattered about your notebook of course), it’s easier to group values based on, for example, the dataset that needs to be processed by your pipeline. A dataclass or pydantic dataclass object can nicely encapsulate your configuration and makes access to its attributes easy and readable.

- Hard-coded values are difficult to reuse across environments. When switching from dev to prod, you’ll often need different credentials, file paths, or tuning parameters. While you can make these values conditional depending on the environment, you already know it’s easier if you would have, for example, a YAML configuration file per environment.

Step 1: Encapsulate your configuration in dataclasses

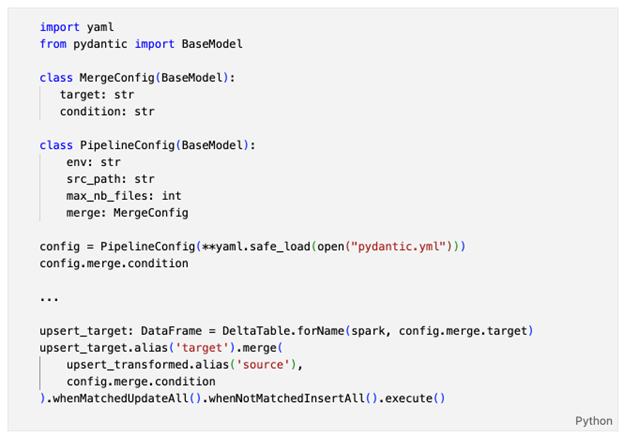

When creating your pipeline notebook or file, start by creating a pydantic dataclass in which you encapsulate your pipeline parameters as attributes. These dataclasses allow you to easily define your configuration object and add attributes throughout your development. Below is a snippet of a simple dataclass with object instantiation and how you can access the config attributes.

Now you have a centralized place to put and access your parameters, simple right? This will make the next step of moving your configuration values to a separate configuration file much simpler.

Step 2: Move parameters to a dedicated (YAML) configuration file

To simplify parameter management, it’s easiest to externalize your parameters into a separate configuration file. This allows you to change the behavior of your pipeline without modifying your code, making it cleaner and more adaptable.

Avoid putting secrets like passwords or API keys in your config files. Those should live in environment variables or a secure secret store.

While JSON might be one of the first formats that you think of for a configuration file, consider YAML (YAML Ain’t Markup Language), as it offers a couple of interesting features:

- More readable

- Support for comments, which is helpful for colleagues (and your future self)

- Flexible structure that can easily handle nested objects, lists, multi-line strings, …

Multi-line strings are truly an underrated feature that can be great for data engineering. Consider a pipeline that uses PySpark where the same pipeline can be used to process different datasets. Suppose now you want to apply a filter using SQL and for some datasets you have a quite complex filter on multiple columns. Using YAML, you can have the SQL statement formatted nicely and easily readable as shown below. Below we have a snippet of a SQL statement from a YAML config and how it could be used in a PySpark pipeline for a merge condition.

Step 3: Validate your configurations

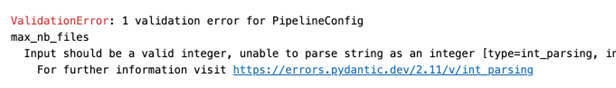

While these configuration files are truly great, they need to be valid. Unfortunately, a small typo or the wrong data type can lead to subtle bugs.

For example, using

enable_feature: "false"

in your configuration file looks fine at a first glance, but in Python a non-empty string (even “false”) evaluates to True. Python is a dynamically typed language and doesn’t know you wanted a Bool instead of a string. If you’re not validating input types and format, you might thus activate an unexpected feature and configure your pipeline differently then intended.

This is exactly why we advise using the pydantic library, since it allows you to define a schema and validate the config even before running the pipeline. Your validation can look as complex and elaborate as you want, so make sure to check out the pydantic package! As you can see, changing the value for max_nb_files from 100 to ten results in a validation error to help avoid the beloved Friday afternoon bug-hunts:

Blocking misconfigurations before your pipeline is executed, ensures you know exactly what to expect from your PipelineConfig instance. You could even add validation of your configs to the deployment pipeline to ensure only valid configurations are deployed to staging and, more importantly, production.

Final thoughts

Configuration management is far from the sexiest part of building data pipelines, yet it’s one that pops up sooner or later. In your next project, make sure to start off by encapsulating your configuration parameters for easy access. Once you’re POC is working, move the configuration values to dedicated YAML files and validate them with pydantic, so you know your pipeline is doing what you intended, even if your colleagues start messing around in the configs!