This article, a 5-minute read, was written by two postgraduate students in applied artificial intelligence at the Erasmus University of Applied Sciences and Arts in Brussels. Together, we started our four-week internship at Micropole Belux on 14 March.

Summary

During our internship, we built a swimming pool detection model from satellite images that we found on the web. Because we were working in Micropole's Microsoft Azure team, we used the Microsoft Azure cloud platform for all our tasks.

Step by step: how we built a swimming pool detection model

Where and how did we get the data?

The first step was to collect data, that is, to extract images from the zoom.earth website. We started from an existing dataset of all addresses in Belgium. Because it contained not only each address but also its longitude and latitude, we could start extracting data right away. The zoom.earth URL shown in the browser's address bar contains these coordinates (longitude = black rectangle, latitude = yellow rectangle), so we could fill them in to point the browser at the right location.
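Turning the address dataset into a list of URLs can be sketched as follows. This is a minimal sketch under stated assumptions: the CSV column names (`address`, `latitude`, `longitude`), the URL template and the zoom level are all hypothetical placeholders, not the exact format we used; copy the real URL layout from your own browser's address bar.

```python
import csv
import io
from typing import Iterable, Iterator

# Hypothetical URL template: copy the real layout from the zoom.earth
# address bar, where latitude and longitude appear in the URL.
ZOOM_EARTH_TEMPLATE = "https://zoom.earth/#view={lat:.6f},{lon:.6f},{zoom}z"


def location_url(lat: float, lon: float, zoom: int = 20) -> str:
    """Build a zoom.earth URL centred on the given coordinates."""
    return ZOOM_EARTH_TEMPLATE.format(lat=lat, lon=lon, zoom=zoom)


def urls_from_addresses(lines: Iterable[str]) -> Iterator[tuple[str, str]]:
    """Yield (address, url) pairs from CSV lines with the hypothetical
    columns 'address', 'latitude' and 'longitude'."""
    for row in csv.DictReader(lines):
        lat = float(row["latitude"])
        lon = float(row["longitude"])
        yield row["address"], location_url(lat, lon)


# Usage with a made-up one-row sample (not real address data):
sample = io.StringIO("address,latitude,longitude\nExample street 1,50.85,4.35\n")
for address, url in urls_from_addresses(sample):
    print(address, url)
```

For the full dataset, the same generator can consume a file opened with `open(path, newline="", encoding="utf-8")`, so the addresses are streamed rather than loaded into memory at once.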

We decided to explore the Azure cloud platform and started with the Azure Functions service. After some digging into JavaScript, we wrote code, run locally, that could navigate to the URL of a given location and take a screenshot of that web page. The next step was to remove the unnecessary elements visible in the screenshot and to resize the page before taking the screenshot, as seen in the satellite image below.

After running our JavaScript code locally, we obtained the following result:

This is where we started running into difficulties: we wanted to save the screenshot to an Azure storage account (Azure's storage service) while running the code locally. We could not find a way to make this work with that approach, and since we only had four weeks for this assignment, we started exploring other options.

Our second option was Selenium, a web testing tool. Using this library in Python, we could do exactly the same things as in JavaScript. The main difference lies in how the image is stored. Since we were no longer using Azure Functions but had moved to Azure Databricks, we could set up our storage account from within the notebook and save our screenshots to that directory. After porting our JavaScript code to Python, we obtained the same result as the image above.
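The capture steps (resize the window, open the URL, hide clutter, save a screenshot) can be sketched as follows. This is a minimal sketch, not our exact code: the CSS selectors in `ELEMENTS_TO_HIDE`, the window size and the fixed wait are hypothetical values. `driver` is expected to be a Selenium WebDriver; the function only calls the standard WebDriver methods `set_window_size`, `get`, `execute_script` and `save_screenshot`.

```python
import time
from pathlib import Path

# Hypothetical CSS selectors for the page elements (menus, search bar, ...)
# that clutter the screenshot; inspect the live page to find the real ones.
ELEMENTS_TO_HIDE = ("header", ".panel", ".search")

# JavaScript executed inside the page: hide every element matching the
# selectors passed in as the first argument.
HIDE_SCRIPT = """
for (const selector of arguments[0]) {
    document.querySelectorAll(selector).forEach(el => { el.style.display = 'none'; });
}
"""


def capture_location(driver, url: str, out_path: str,
                     width: int = 800, height: int = 800,
                     hide=ELEMENTS_TO_HIDE, wait: float = 3.0) -> str:
    """Open `url` in a Selenium WebDriver, hide clutter and save a PNG screenshot.

    `driver` is any Selenium WebDriver instance, e.g. a headless Chrome.
    """
    driver.set_window_size(width, height)            # same size for every capture
    driver.get(url)                                  # navigate to the location
    time.sleep(wait)                                 # crude wait for map tiles to load
    driver.execute_script(HIDE_SCRIPT, list(hide))   # remove unneeded elements
    Path(out_path).parent.mkdir(parents=True, exist_ok=True)
    driver.save_screenshot(out_path)                 # write the screenshot to disk
    return out_path
```

On Databricks, `driver` could be created with `webdriver.Chrome(options=opts)` after `opts.add_argument("--headless")`, and `out_path` could point to a mounted storage location such as `/dbfs/mnt/<your-container>/...` (a placeholder path). A fixed `sleep` is the simplest option; Selenium's `WebDriverWait` is the more robust alternative.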

How did we label the data to train and test our model?

After collecting all the data, we had to attach meaningful information to the raw data (in this case, the images) by labeling it.

During labeling, we used two labels:

- pool (1), or

- no pool (0).

When an image showed a pool, we assigned label 1; when it did not, label 0. We made sure that all images with the same label were grouped in the same subdirectory, so that we could use them later for data analysis in the next step.
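The one-folder-per-label layout can be sketched like this. The folder names `pool` and `no_pool` and the `.png` extension are hypothetical choices for illustration.

```python
import shutil
from pathlib import Path

# Hypothetical folder names, one subdirectory per label.
LABEL_DIRS = {1: "pool", 0: "no_pool"}


def file_by_label(image_path: str, label: int, dataset_root: str) -> Path:
    """Copy an image into <dataset_root>/<label folder>/ and return its new path."""
    if label not in LABEL_DIRS:
        raise ValueError(f"label must be 0 or 1, got {label!r}")
    target_dir = Path(dataset_root) / LABEL_DIRS[label]
    target_dir.mkdir(parents=True, exist_ok=True)
    target = target_dir / Path(image_path).name
    shutil.copy2(image_path, target)  # copy, keeping the original screenshot
    return target


def load_labels(dataset_root: str) -> dict[str, int]:
    """Recover {file name: label} from the folder layout alone."""
    labels = {}
    for label, folder in LABEL_DIRS.items():
        for path in sorted((Path(dataset_root) / folder).glob("*.png")):
            labels[path.name] = label
    return labels
```

A side benefit of this layout is that loaders such as torchvision's `ImageFolder` read class labels straight from subdirectory names. They assign indices in alphabetical order, so `no_pool` becomes 0 and `pool` becomes 1, matching the labels above.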

How do you teach a computer what a swimming pool is?

For the analysis, we used a neural network, and more specifically transfer learning. Transfer learning is not a type of network but a technique: it takes a model pre-trained on a related, more general task, in our case image recognition, and adapts it to a new task (detecting swimming pools). As the pre-trained model, we used a Residual Network (ResNet). ResNet variants range from 18 to 152 layers deep; the more layers, the more complex the pre-trained model.
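The core idea of transfer learning, namely keeping the pre-trained layers frozen and training only a new output layer on our labels, can be illustrated without a deep-learning framework. In this pure-Python sketch the "backbone" is a toy stand-in for ResNet (the mean of each colour channel), not a real pre-trained network, and the sample images are made up. In practice the backbone would be a ResNet loaded from a library such as torchvision, with its final layer replaced by a two-class output.

```python
import math

Pixel = tuple[int, int, int]


def frozen_backbone(image: list[Pixel]) -> list[float]:
    """Toy stand-in for a pre-trained ResNet (NOT a real network).

    Maps an image to a feature vector (mean R, G, B scaled to 0..1).
    Like the pre-trained layers in transfer learning, it is never updated.
    """
    n = len(image)
    return [sum(px[c] for px in image) / (255 * n) for c in range(3)]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_head(features: list[list[float]], labels: list[int],
               epochs: int = 300, lr: float = 0.5) -> tuple[list[float], float]:
    """Train only the new classification head (logistic regression, plain SGD)."""
    w = [0.0] * len(features[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(features, labels):
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b


def predict(w: list[float], b: float, features: list[float]) -> int:
    """Return 1 (pool) or 0 (no pool)."""
    return int(sigmoid(sum(wi * xi for wi, xi in zip(w, features)) + b) >= 0.5)


# Usage with made-up single-colour "images":
pool = [(0, 170, 255)] * 16
grass = [(60, 120, 40)] * 16
roof = [(150, 60, 50)] * 16
w, b = train_head([frozen_backbone(img) for img in (pool, grass, roof)], [1, 0, 0])
print(predict(w, b, frozen_backbone(pool)), predict(w, b, frozen_backbone(grass)))
```

The design point is the split: only `w` and `b` change during training, which is why transfer learning needs far fewer labeled images than training a deep network from scratch.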

After training the neural network on our dataset, we found that the results were not as good as we had hoped. Because of these disappointing results, we decided to take a different approach.

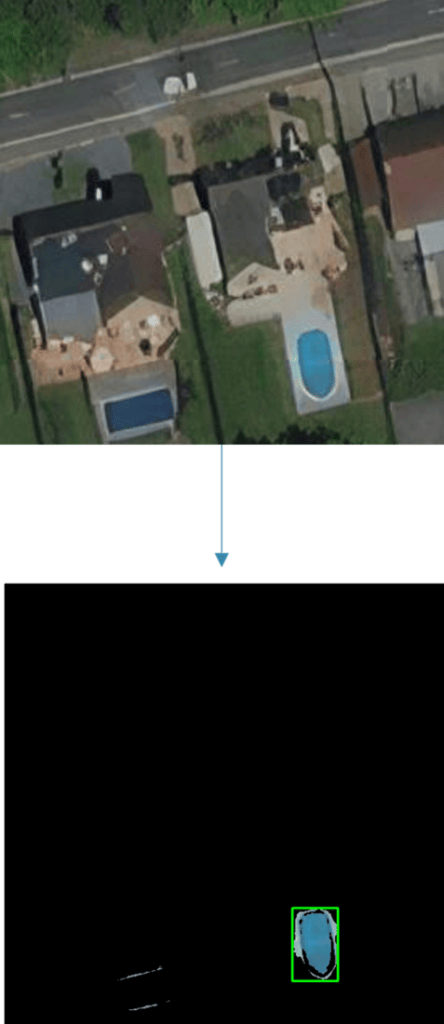

This time we did not use machine learning algorithms; instead, we filtered each image for the characteristic blue of pool water, as shown in the illustration below. When an image contained enough blue, we gave it label 1 (pool). This model has an accuracy of 85%: it correctly detects a pool in 80% of the images that contain one and correctly reports the absence of a pool in 90% of the images without one. Its drawback is that it cannot detect covered pools.
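The colour filter and the way the figures above relate to each other can be sketched as follows. Each pixel is converted to HSV, pixels whose hue falls in a "pool blue" range are counted, and the image gets label 1 when their share exceeds a threshold. All thresholds here are illustrative assumptions, not the values we tuned. The `evaluate` helper computes accuracy plus the share of pools and non-pools found correctly; assuming a balanced test set, 80% and 90% average out to the 85% accuracy.

```python
import colorsys

Pixel = tuple[int, int, int]

# Illustrative thresholds (assumptions, not tuned values): hue range of
# pool water (about 170 to 220 degrees), minimum saturation and brightness,
# and the minimum share of such pixels for an image to count as "pool".
HUE_MIN, HUE_MAX = 170 / 360, 220 / 360
SAT_MIN, VAL_MIN = 0.3, 0.4
MIN_BLUE_FRACTION = 0.02


def is_pool_blue(pixel: Pixel) -> bool:
    """True if an (r, g, b) pixel, 0..255 per channel, looks like pool water."""
    h, s, v = colorsys.rgb_to_hsv(*(c / 255 for c in pixel))
    return HUE_MIN <= h <= HUE_MAX and s >= SAT_MIN and v >= VAL_MIN


def predict_pool(pixels: list[Pixel]) -> int:
    """Label 1 (pool) if enough pixels are pool blue, else 0 (no pool)."""
    blue = sum(is_pool_blue(p) for p in pixels)
    return int(blue / len(pixels) >= MIN_BLUE_FRACTION)


def evaluate(predictions: list[int], labels: list[int]) -> dict[str, float]:
    """Accuracy plus the share of pools and of non-pools classified correctly."""
    pairs = list(zip(predictions, labels))
    tp = sum(p == 1 and y == 1 for p, y in pairs)
    tn = sum(p == 0 and y == 0 for p, y in pairs)
    positives = sum(labels)
    negatives = len(labels) - positives
    return {
        "accuracy": (tp + tn) / len(labels),
        "pool_recall": tp / positives,      # a.k.a. sensitivity
        "no_pool_recall": tn / negatives,   # a.k.a. specificity
    }
```

The pixels of a screenshot could be obtained with Pillow, e.g. `list(Image.open(path).convert("RGB").getdata())`. With 100 images of each class, 80 + 90 correct predictions give (80 + 90) / 200 = 85% accuracy. The sketch also shows why covered pools are missed: without visible water there are no blue pixels to count.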

Conclusion

Satellite images can be collected in an Azure cloud environment using Selenium on Databricks. Azure ML can be used to label the dataset and, potentially, to train a model that makes predictions.

Trying out the different approaches was a very instructive experience. We thoroughly enjoyed working on this project and the discussions with our colleagues. Special thanks to Egon, Kenny, Esa and Antoine for helping us carry out this project and for kindly answering our questions.

Want to know more?

Get in touch with us!