VOO transforme ses services de BI et migre dans le Cloud – Article dans Solutions Magazine.

Résumé

VOO achève Memento, son programme de transformation business intelligence et big data avec, à la clé, une migration dans le cloud. Découvrez comment Micropole a accompagné l’opérateur durant les différentes phases du projet.

Les défis de VOO

Dans le contexte d’une transformation mondiale, Micropole a aidé VOO à mettre en œuvre une migration complète de sa Business Intelligence, de ses Big Data et de son paysage d’IA dans le cloud. Cette migration était essentielle pour répondre aux besoins stratégiques et urgents de l’entreprise :

- Augmenter drastiquement les informations sur les clients pour accélérer l’acquisition et améliorer la fidélité et la rétention ;

- Soutenir la transformation digitale en offrant une vision unifiée du client et de son comportement ;

- Relever les nouveaux défis liés à la conformité (RGPD) ;

- Diminuer radicalement le coût total de possession des environnements de données globaux (4 environnements de BI différents + 3 clusters Hadoop avant la transformation) ;

- Mettre en place une gouvernance des données à l’échelle de l’entreprise et traiter le « Shadow BI » (+ de 25 ETP dans l’entreprise pour le nettoyage et le traitement des données).

La solution et le résultat générés par Micropole

Micropole a procédé à une étude rapide, passé en revue tous les aspects de la transformation et relevé les défis organisationnels (rôles et responsabilités, équipes et compétences, processus, gouvernance) et techniques (scénarios architecturaux globaux, allant des solutions de cloud hybrides aux solutions de cloud complètes en mode PaaS, ou Platform-as-a-Service).

En se basant sur la conclusion de l’étude, Micropole a déployé une plateforme de données basée dans le cloud à l’échelle de l’entreprise permettant de combiner les processus BI classiques et des capacités analytiques de pointe. Micropole a aidé à redéfinir l’organisation des données et les processus connexes et a introduit la gouvernance des données au niveau de l’entreprise.

Le coût total de possession a chuté, il représente désormais moins du tiers de ce qu’il était, tandis que la capacité et l’agilité se sont nettement améliorées.

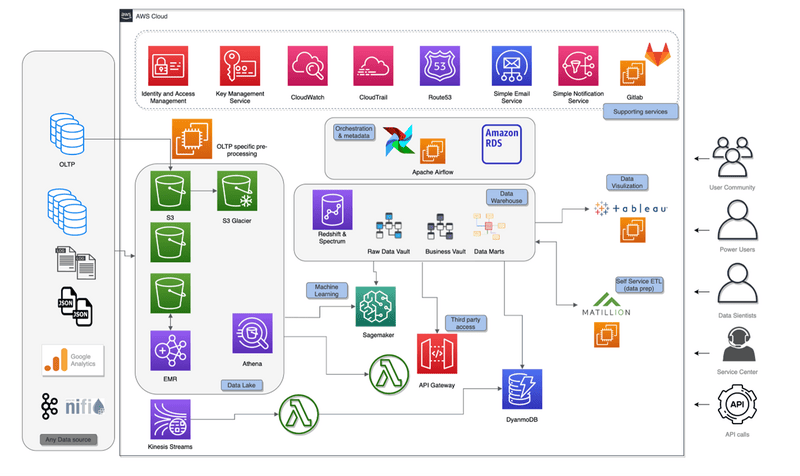

Architecture basée sur les services data clés d’AWS

Data Lake

Amazon S3 est utilisé pour la couche centrale d’entrée et pour la conservation sur le long terme.

Certains fichiers de données sont prétraités sur Amazon EMR. Les clusters EMR sont créés à la volée plusieurs fois par jour. Les clusters traitent uniquement les nouvelles données qui arrivent dans S3. Une fois les données traitées et stockées dans un format Apache Parquet optimisé pour l’analyse, le cluster est détruit. Le chiffrement et la gestion du cycle de vie sont activés sur la plupart des compartiments S3 pour répondre aux exigences de sécurité et de rentabilité. Plus de 600 To de données sont actuellement stockés dans le lac de données. Amazon Athena est utilisé pour créer et maintenir un catalogue de données et explorer les données brutes dans le lac de données.

Intégration en temps réel

Amazon Kinesis Data Streams capture les données en temps réel ; ces données sont filtrées et enrichies (avec les données de l’entrepôt de données) par une fonction Lambda avant d’être stockées dans une base de données Amazon DynamoDB. Les données en temps réel sont également stockées dans des compartiments S3 dédiés pour être conservées.

Entrepôt de données

L’entrepôt de données fonctionne sur Amazon Redshift, il utilise les nouveaux nœuds RA3 et suit la méthodologie Data Vault 2.0. Les objets Data Vault sont très standardisés et ont des règles de modélisation strictes, ce qui permet un haut niveau de standardisation et d’automatisation. Le modèle de données est généré à partir des métadonnées stockées dans une base de données Amazon RDS Aurora.

Le moteur d’automatisation lui-même est construit sur Apache Airflow, déployé sur des instances EC2.

La mise en œuvre du projet a débuté en juin 2017 ; le cluster Redshift de production initialement dimensionné sur 6 nœuds DC2 a évolué de manière transparente au fil du temps pour répondre aux besoins croissants des projets en matière de données et à l’ensemble des besoins métier.

DynamoDB

Amazon DynamoDB est utilisé pour des cas d’utilisation spécifiques où les applications web ont besoin de temps de réponse inférieurs à la seconde. L’utilisation de la capacité variable d’écriture/lecture de DynamoDB permet de provisionner la capacité de lecture haute performance, plus coûteuse, uniquement pendant les heures d’ouverture de l’entreprise, lorsqu’une faible latence et un temps de réponse rapide sont nécessaires. Ces mécanismes, qui reposent sur l’élasticité des services AWS, sont utilisés pour optimiser la facture mensuelle d’AWS.

Apprentissage machine

Une série de modèles prédictifs ont été mis en œuvre, allant d’un modèle de prédiction des désabonnements classique à des cas d’utilisation plus avancés. Par exemple, un modèle a été construit pour repérer les clients qui sont susceptibles d’avoir été touchés par une panne du réseau. Amazon SageMaker a été utilisé pour construire, former et déployer les modèles à l’échelle, en exploitant les données disponibles dans le Data Lake (Amazon S3) et l’entrepôt de données (Amazon Redshift).

API pour l’accès externe

Les parties externes doivent accéder à des ensembles de données spécifiques de manière sécurisée et fiable et Amazon API Gateway est utilisé pour déployer des API RESTful sécurisées au-dessus de microservices de données sans serveur mis en œuvre avec des fonctions Lambda.

Et bien plus encore !

La plateforme de données que Micropole a construite pour VOO offre des dizaines d’autres possibilités. La large panoplie de services disponibles dans l’environnement AWS permet de traiter de nouveaux cas d’utilisation chaque jour, de manière rapide et efficace.

Mentions dans la presse

General Manager

Micropole BeLux