BigLake: why and how to use it?

Organizations are seeing their data grow at an accelerating pace. As teams build solutions with the tools best suited to each job, data often ends up spread across different locations, formats, and even cloud platforms. This increasingly distributed data leads to silos, and data silos carry their own risks and problems. Yet the data holds significant analytical value that can serve new, increasingly demanding customer use cases.

To keep pace in a fast-growing digital world, companies must break down data silos and enable new analytical use cases, regardless of where the data is stored or what format it is in.

To bridge this gap between data and value, Google introduced BigLake. Building on years of BigQuery innovation, BigLake is a storage engine that unifies data lakes and data warehouses while providing fine-grained access control, performance acceleration across multi-cloud storage, and support for open file formats.

Architecture - What is BigLake?

By creating BigLake tables in BigQuery, or by using the BigLake connector on open-source engines such as Apache Spark, you can extend your access to data in Amazon S3, Azure Data Lake Storage, and of course Google Cloud Storage.

Data is accessible through the supported open data formats: Avro, CSV, JSON, ORC, and Parquet. Since Google Cloud Next 2022, Apache Iceberg, Delta Lake, and Apache Hudi are supported as well.

BigLake extends BigQuery's fine-grained access control to the table, row, or column level. With BigQuery Omni, this security policy is enforced consistently on other cloud platforms. It enables interoperability between data warehouses and data lakes while managing a single copy of the data.

Because BigLake tables are a type of BigQuery table, they can also be discovered centrally in Data Catalog and managed at scale with Dataplex, which unifies governance and management.

Demo - How to use BigLake?

In this introductory demo, we will explore the results of an international triathlon competition. The file contains the following information about the participants:

- ID

- Name

- Email

- Country

- Duration in minutes

The file is stored in Google Cloud Storage. Let's find out who has the fastest time while preserving the participants' privacy.

1. First, create a connection

The first step is to create an external connection to our data. The BigLake connection to Google Cloud Storage can be created from the BigQuery service page.

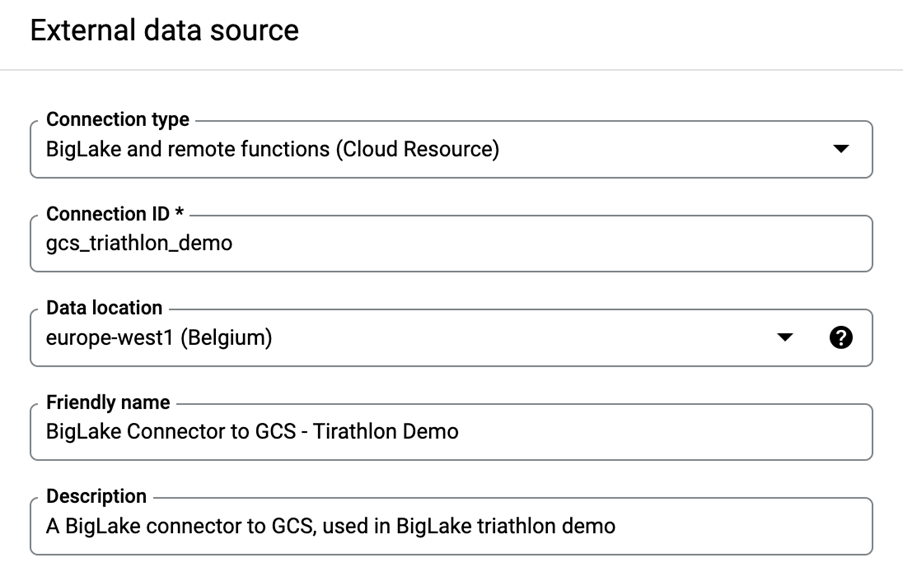

In the top left, click “+ ADD DATA”. A new panel appears on the right; click “Connections to external data sources” and fill in the following fields:

- Connection type: choose “BigLake and remote functions”.

- Connection ID: “gcs_triathlon_demo”.

- Data location: “europe-west1 (Belgium)”.

- Friendly name and description: optional, but useful.

- Click “CREATE CONNECTION”.

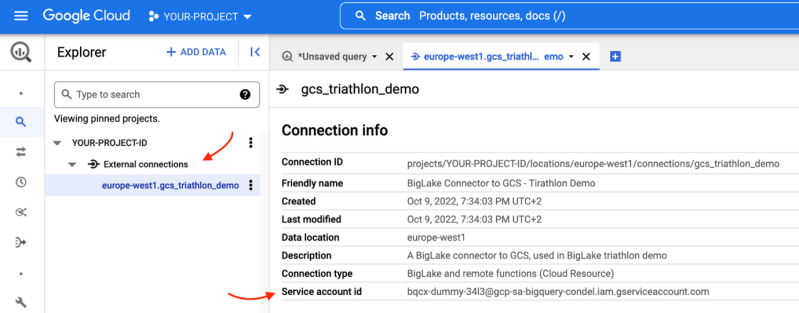

Once the connection is created, it appears in the left pane under “External connections”.

Let's copy the “Service account ID”, since we will need to grant this service account read permission on the object storage.
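The same connection can also be created programmatically. The sketch below is a minimal example, not the method used in this demo. It assumes the `google-cloud-bigquery-connection` Python package is installed and that application default credentials are configured. It also assumes that the console's “BigLake and remote functions” type corresponds to a Cloud resource connection in the API. `YOUR-PROJECT` is a placeholder and the helper names are illustrative.

```python
PROJECT_ID = "YOUR-PROJECT"  # placeholder: replace with your own project ID
LOCATION = "europe-west1"
CONNECTION_ID = "gcs_triathlon_demo"


def connection_parent(project_id: str, location: str) -> str:
    """Resource name of the location that will own the connection."""
    return f"projects/{project_id}/locations/{location}"


def create_biglake_connection(project_id: str, location: str, connection_id: str) -> str:
    """Create a Cloud resource connection and return its service account ID."""
    # Imported lazily so the helper above works without the package installed.
    from google.cloud import bigquery_connection_v1 as bq_connection

    client = bq_connection.ConnectionServiceClient()
    request = bq_connection.CreateConnectionRequest(
        parent=connection_parent(project_id, location),
        connection_id=connection_id,
        # Assumption: empty CloudResourceProperties selects the Cloud resource
        # connection type; the service fills in the service account on creation.
        connection=bq_connection.Connection(
            cloud_resource=bq_connection.CloudResourceProperties()
        ),
    )
    connection = client.create_connection(request=request)
    return connection.cloud_resource.service_account_id
```

Calling `create_biglake_connection(PROJECT_ID, LOCATION, CONNECTION_ID)` should return the service account ID that the next step needs.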

2. Grant the service account read access

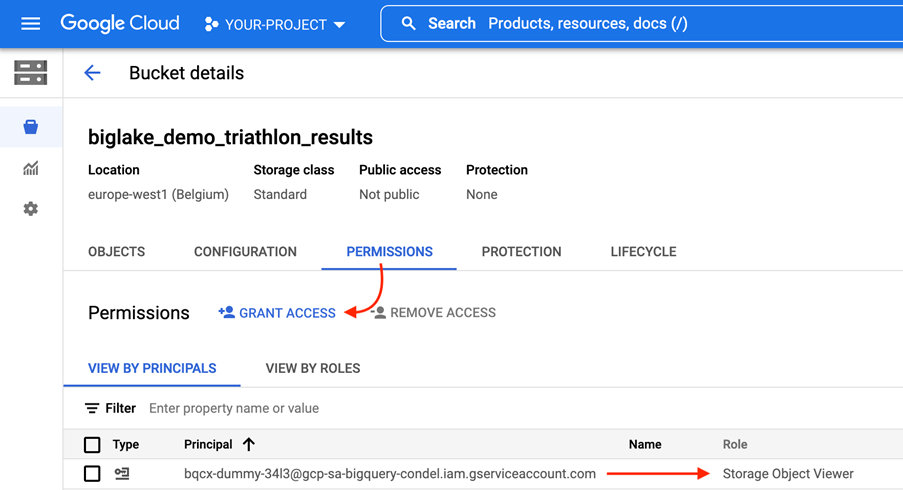

Following the principle of least privilege, do not grant broad permissions to service accounts that do not need them. In our case, we will grant the object viewer permission at the bucket level.

Navigate to the Google Cloud Storage page and grant read permissions on the bucket that contains our data file.

Our file is stored in the bucket named “biglake_demo_triathlon_results”. Open the bucket and go to the “PERMISSIONS” tab. There, grant “Storage Object Viewer” to the connection's service account, “bqcx-dummy-34l3@gcp-sa-bigquery-condel.iam.gserviceaccount.com”.
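For readers who prefer to script this step, here is a hedged sketch of the same bucket-level grant. It assumes the `google-cloud-storage` Python package and credentials that are allowed to change the bucket's IAM policy. The function names are illustrative, and the role ID `roles/storage.objectViewer` is the identifier behind the “Storage Object Viewer” label.

```python
BUCKET_NAME = "biglake_demo_triathlon_results"
SERVICE_ACCOUNT = "bqcx-dummy-34l3@gcp-sa-bigquery-condel.iam.gserviceaccount.com"
VIEWER_ROLE = "roles/storage.objectViewer"  # "Storage Object Viewer" in the console


def viewer_binding(service_account: str) -> dict:
    """Build an IAM binding that gives one service account read access to objects."""
    return {"role": VIEWER_ROLE, "members": {f"serviceAccount:{service_account}"}}


def grant_bucket_read_access(bucket_name: str, service_account: str) -> None:
    """Add the viewer binding to the bucket's IAM policy (bucket level only)."""
    # Imported lazily so viewer_binding() works without the package installed.
    from google.cloud import storage

    bucket = storage.Client().bucket(bucket_name)
    policy = bucket.get_iam_policy(requested_policy_version=3)
    policy.bindings.append(viewer_binding(service_account))
    bucket.set_iam_policy(policy)
```

Because the binding is attached to a single bucket rather than to the project, the service account gains no access to other buckets, which keeps the grant in line with the least-privilege principle above.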

3. Create the BigLake table

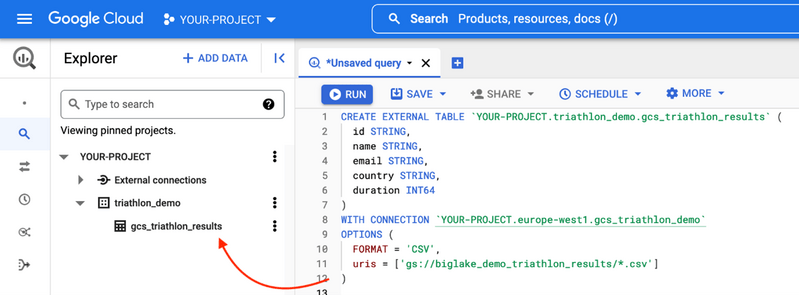

The next step is to create our BigLake table in a dataset named “triathlon_demo”, located in the “europe-west1” region, the same region as our data.

Instead of creating the BigLake table through the UI, let's switch things up and create it with SQL, using our BigLake connection:

    CREATE EXTERNAL TABLE `YOUR-PROJECT.triathlon_demo.gcs_triathlon_results` (
      id STRING,
      nom STRING,      -- participant name
      email STRING,
      pays STRING,     -- country
      `durée` INT64    -- duration in minutes
    )
    -- Our BigLake to GCS connector
    WITH CONNECTION `YOUR-PROJECT.europe-west1.gcs_triathlon_demo`
    OPTIONS (
      format = 'CSV',
      uris = ['gs://biglake_demo_triathlon_results/*.csv']
    )

BigQuery supports reading multiple source files through a single table. To do so, use the wildcard character (“\*”) in the URI.

Running the query above creates the BigLake table.

4. Protect the participants' personal information

With Dataplex, we can seamlessly add unified governance and management at scale to our BigLake table. In this demo, we will see how to restrict access at the column level using taxonomies.

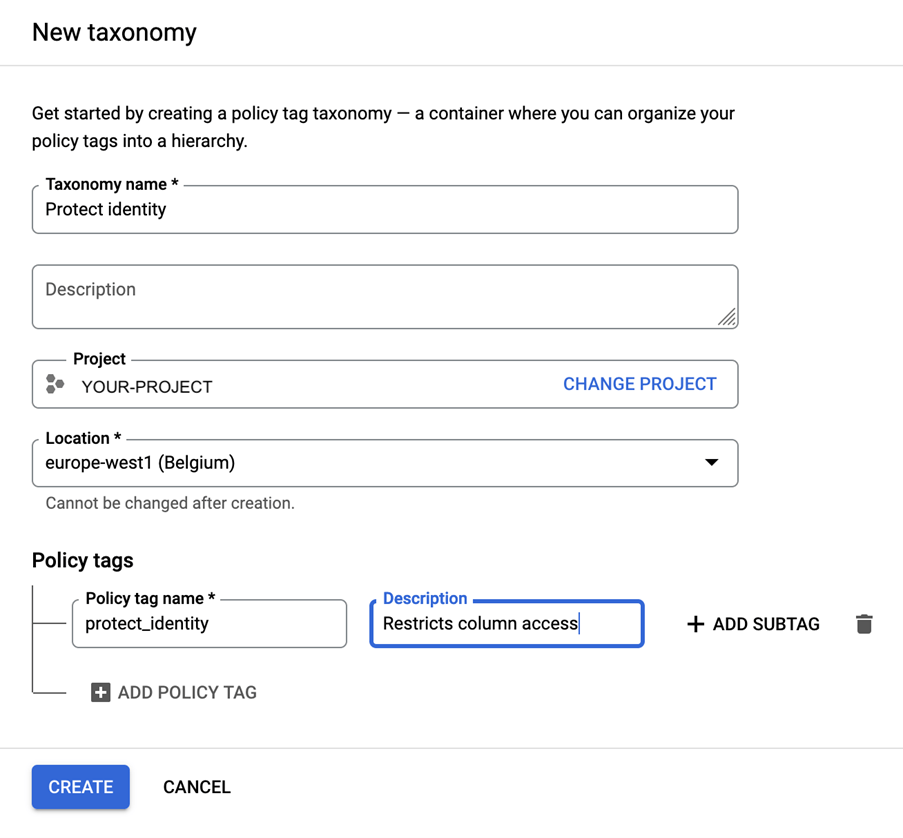

To create a policy tag, a taxonomy must first be defined, which is done on the Dataplex Taxonomy page.

Click “CREATE NEW TAXONOMY” and fill in the form:

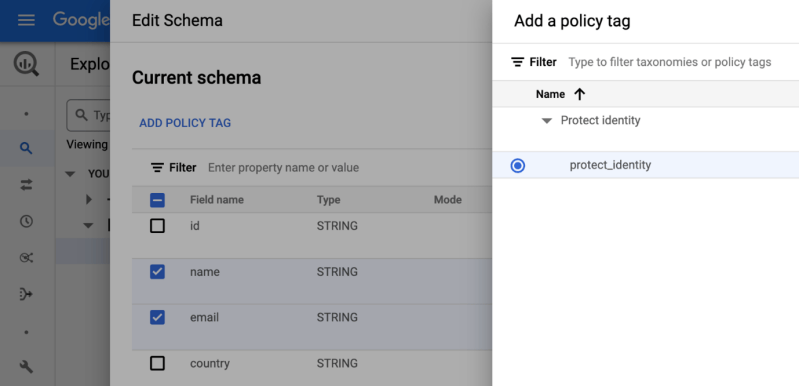

Once the taxonomy is created, let's apply it to the columns that contain sensitive information and that we want to restrict. Go back to BigQuery and select our BigLake table. Click “EDIT SCHEMA”, select the “nom” (name) and “email” columns, and click “ADD POLICY TAG”. Select the policy tag and save the changes.
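The schema edit can also be scripted. The following is a sketch under stated assumptions, not the procedure used in this demo. It assumes the `google-cloud-bigquery` Python package, a table whose sensitive columns are named `nom` and `email` as in the DDL above, and a policy tag that already exists. The `POLICY_TAG` resource name is a hypothetical placeholder, and the function names are illustrative.

```python
TABLE_ID = "YOUR-PROJECT.triathlon_demo.gcs_triathlon_results"  # placeholder project
SENSITIVE_COLUMNS = frozenset({"nom", "email"})
# Hypothetical resource name: substitute the IDs of your own taxonomy and policy tag.
POLICY_TAG = (
    "projects/YOUR-PROJECT/locations/europe-west1/"
    "taxonomies/TAXONOMY_ID/policyTags/POLICY_TAG_ID"
)


def columns_to_tag(column_names, sensitive=SENSITIVE_COLUMNS):
    """Return, in schema order, the columns that should receive the policy tag."""
    return [name for name in column_names if name in sensitive]


def apply_policy_tag(table_id: str, policy_tag: str, sensitive=SENSITIVE_COLUMNS) -> None:
    """Rebuild the table schema with the policy tag attached to sensitive columns."""
    # Imported lazily so columns_to_tag() works without the package installed.
    from google.cloud import bigquery

    client = bigquery.Client()
    table = client.get_table(table_id)
    tagged = set(columns_to_tag([field.name for field in table.schema], sensitive))

    new_schema = []
    for field in table.schema:
        if field.name in tagged:
            # SchemaField objects are immutable, so create a tagged copy.
            field = bigquery.SchemaField(
                field.name,
                field.field_type,
                mode=field.mode,
                policy_tags=bigquery.PolicyTagList(names=[policy_tag]),
            )
        new_schema.append(field)

    table.schema = new_schema
    client.update_table(table, ["schema"])  # send only the schema change
```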

The columns are now protected. Let's test it!

5. Result: who has the best time?

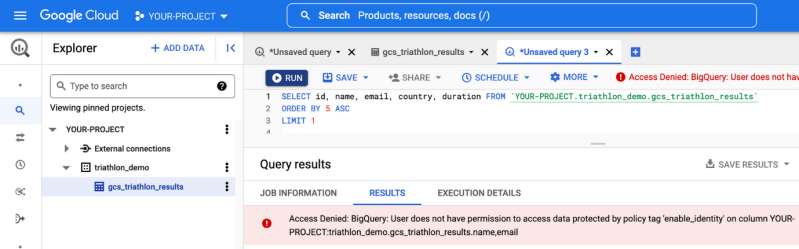

With everything configured and the policy tag applied, let's find out who was the fastest.

When we run the query, we are greeted with a message telling us that we do not have permission to view the participants' names or emails. So our policy works!

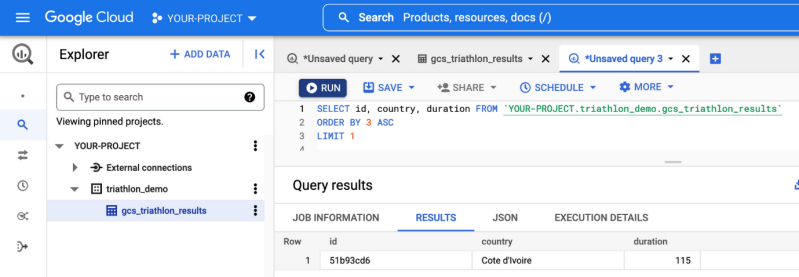

By excluding these fields from the query, we can finally see the result:

The anonymous participant with ID “51b93cd6”, from Côte d'Ivoire, has the shortest race time: 115 minutes.
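The queries themselves appear only in the screenshots, so here is a hedged reconstruction of the second one, which leaves out the protected columns. It assumes the column names from the DDL above and the `google-cloud-bigquery` Python package; the function names are illustrative and the exact query used in the demo may differ.

```python
TABLE_ID = "YOUR-PROJECT.triathlon_demo.gcs_triathlon_results"  # placeholder project
VISIBLE_COLUMNS = ("id", "pays", "durée")  # everything except "nom" and "email"


def fastest_participant_query(table_id: str, columns=VISIBLE_COLUMNS) -> str:
    """Build a query returning the row with the shortest duration."""
    # Backticks keep the accented column name valid as an identifier.
    select_list = ", ".join(f"`{column}`" for column in columns)
    return (
        f"SELECT {select_list}\n"
        f"FROM `{table_id}`\n"
        "ORDER BY `durée` ASC\n"
        "LIMIT 1"
    )


def run_query(sql: str) -> list:
    """Run the query and return the rows as dictionaries."""
    # Imported lazily so the query builder works without the package installed.
    from google.cloud import bigquery

    client = bigquery.Client()
    return [dict(row) for row in client.query(sql).result()]
```

Selecting the columns explicitly is what lets the query pass the column-level check: a `SELECT *` would touch `nom` and `email` and be rejected for a user without access to the policy tag.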

6. Conclusion

BigLake narrows the gap between data and value by eliminating data silos across cloud platforms, while supporting fine-grained access control and multi-cloud governance of distributed data. It is a tool that fits well in a multi-cloud organization.

Key takeaways from BigLake

- A single copy of the data is managed, with a uniform feature set across data lakes and data warehouses.

- Fine-grained access control across multi-cloud platforms.

- Integration with open-source software and support for open data formats.

- Benefits inherited from BigQuery.

Want to know more?

Get in touch with us!