Introduction

Microsoft announced Microsoft Fabric at the Build conference, which began on May 23, 2023, and launched it in public preview at the same time. It comes with many features for unifying your data estate and reshaping how your whole team consumes data. Built entirely on Delta Lake, the improved serverless engine is likely to make some waves in the data world.

This post shows how to connect your Microsoft Fabric KQL (Kusto Query Language) database to an Azure Event Hubs stream. Combining the two lets you stream your data into a KQL store in Microsoft Fabric. This is not the only possible source or destination, as we will see later in this post.

Components you will need:

- Azure subscription

- Event Hubs namespace + event hub

- Microsoft Fabric account (trial)

- Streaming dataset (if you use a built-in generated dataset, you can skip this step)

For the Event Hubs namespace, you can use the Basic pricing tier, but you may run into its consumer group limit (only 1 consumer group is possible). Once you have connected your Microsoft Fabric KQL DB to the consumer group, you will no longer see information about the $Default consumer group in the portal.

You can still change the pricing tier after creating the Event Hubs namespace.

Real-Time Analytics

Step 1 - Setup

Use your favorite browser to sign in at https://app.fabric.microsoft.com/

We will mainly use the “Real-Time Analytics” tab (the last one). Select it to open the RTA home page.

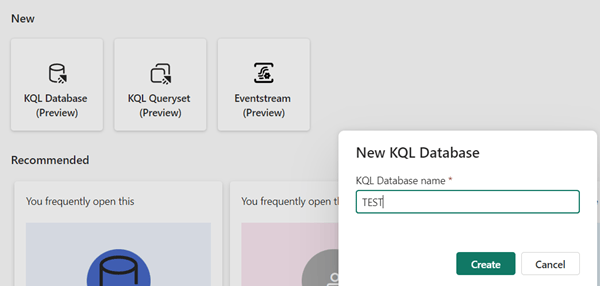

From there, we can easily create a new KQL database by selecting the KQL Database (Preview) button at the top left and giving it a meaningful name. (TEST is meaningful, right?)

After that, you should see your database open in the Real-Time Analytics tab.

If you lose track of where the KQL database you just created lives, simply go back to your workspace tab and apply a few filters, for example on the name, on the KQL Database type, or on both.

Step 2 - Start ingesting data

Now it is time to start ingesting data into the KQL database.

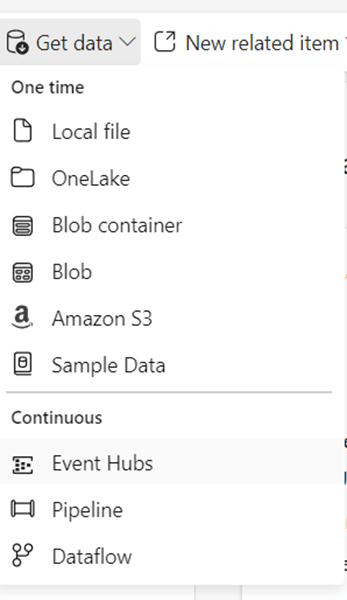

We can either select Get data -> Event Hubs (see the image on the right) or…

… create a table!

Next comes an important step: connecting to the Event Hubs namespace + event hub that we need to have in place.

Step 3 - Cloud connection

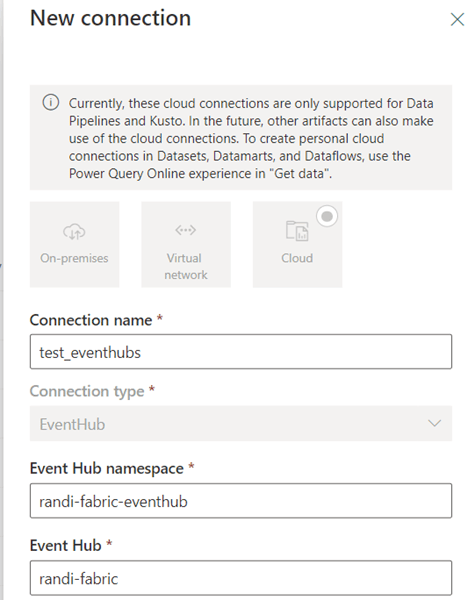

On the Event Hubs data source tab, select Create new cloud connection.

This opens another browser tab, which closes automatically. See my previous blog post, “5 tips to get started with Microsoft Fabric”, for how to manage connections without the automatic close getting in the way.

The first part is straightforward: choose a connection name, hopefully a more meaningful one than mine. Then enter the Azure Event Hubs namespace and the event hub within that namespace that you want to connect to.
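
To make those two values concrete, here is a minimal Python sketch of how a namespace and an event hub name combine into an address. The names `contoso-ns` and `telemetry` are hypothetical, and the sketch assumes the public Azure cloud, where namespaces are reachable under `servicebus.windows.net`. Fabric itself only asks for the two names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EventHubTarget:
    """The two values the connection dialog asks for (example values are made up)."""
    namespace: str  # Event Hubs namespace, e.g. "contoso-ns"
    event_hub: str  # event hub inside that namespace, e.g. "telemetry"

    @property
    def host(self) -> str:
        # Assumption: public Azure cloud, so the namespace lives under this domain.
        return f"{self.namespace}.servicebus.windows.net"

    @property
    def resource_uri(self) -> str:
        # Address of the specific event hub within the namespace.
        return f"sb://{self.host}/{self.event_hub}"


target = EventHubTarget(namespace="contoso-ns", event_hub="telemetry")
print(target.host)
print(target.resource_uri)
```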

Step 4 - Authentication

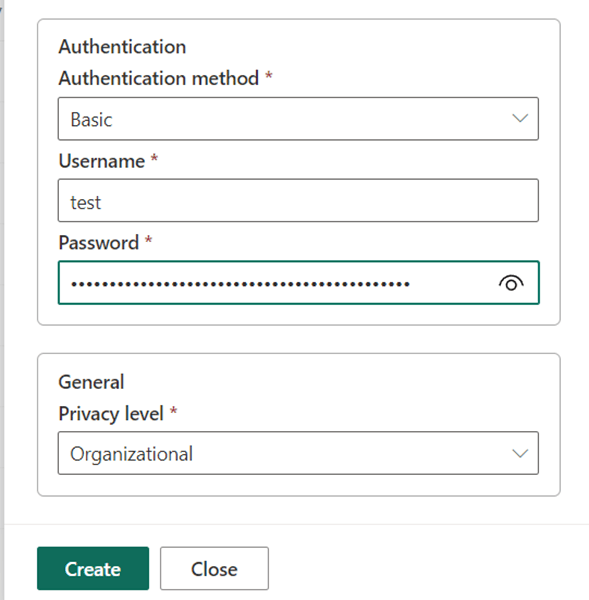

For Basic authentication (currently the only option), you need to supply a shared access policy. To do so, use the policy name and the key value (primary or secondary) that belongs to it. The policy name is the username and the key value is the password.

You can use the namespace's default policy, but it is better to create one for the specific event hub you are connecting to. You also only need the Listen right on that event hub. Hopefully more authentication methods will arrive in the future, but for now this is the least-privilege approach.
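
If you copy the policy's connection string from the Azure portal rather than the individual fields, the username and password can be read out of it. The sketch below assumes the usual `Endpoint=...;SharedAccessKeyName=...;SharedAccessKey=...;EntityPath=...` layout; the policy name `fabric-listen` and the key are made-up values, and the helper name is hypothetical.

```python
def credentials_from_connection_string(connection_string):
    """Map a shared access policy connection string to username/password."""
    parts = {}
    for segment in connection_string.split(";"):
        if not segment:
            continue  # tolerate a trailing semicolon
        # Split on the first "=" only: base64 keys often end with "=" padding.
        name, _, value = segment.partition("=")
        parts[name] = value
    return {
        "username": parts["SharedAccessKeyName"],  # policy name
        "password": parts["SharedAccessKey"],      # primary or secondary key
    }


# Made-up connection string for a listen-only policy on one event hub.
example = (
    "Endpoint=sb://contoso-ns.servicebus.windows.net/;"
    "SharedAccessKeyName=fabric-listen;"
    "SharedAccessKey=ZmFrZS1rZXk=;"
    "EntityPath=telemetry"
)
creds = credentials_from_connection_string(example)
print(creds["username"])
```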

Step 5 - Prepare a dataset

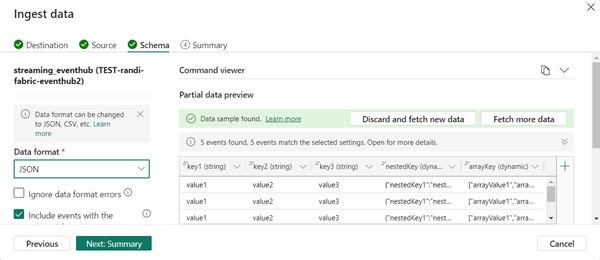

Once that is done, first make sure sample data is available for this consumer group before moving on to the schema. On the Schema tab, Fabric immediately tries to use data from the event hub to build a schema.

If you have no data ready, you can try the preview feature for generating data in the event hub itself.
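
Alternatively, you can push a few test events yourself. The following is a rough sketch using only the Python standard library: it signs a SAS token with a shared access policy and prepares an HTTP POST to the event hub's `/messages` endpoint, as I understand the Event Hubs REST API. It assumes a separate policy with the Send right (the listen-only policy from step 4 cannot send); `contoso-ns`, `telemetry`, `send-policy`, the key and the event fields are all made up.

```python
import base64
import hashlib
import hmac
import json
import random
import time
import urllib.parse
import urllib.request


def make_sas_token(resource_uri, policy_name, key, ttl_seconds=3600):
    """Sign a short-lived SAS token with a shared access policy key."""
    expiry = int(time.time()) + ttl_seconds
    encoded_uri = urllib.parse.quote_plus(resource_uri)
    # String to sign: URL-encoded resource URI, a newline, then the expiry.
    to_sign = f"{encoded_uri}\n{expiry}".encode("utf-8")
    digest = hmac.new(key.encode("utf-8"), to_sign, hashlib.sha256).digest()
    signature = urllib.parse.quote_plus(base64.b64encode(digest).decode("ascii"))
    return (f"SharedAccessSignature sr={encoded_uri}"
            f"&sig={signature}&se={expiry}&skn={policy_name}")


def sample_event(rng):
    """One made-up telemetry reading; the field names are purely illustrative."""
    return {
        "deviceId": f"device-{rng.randint(1, 5)}",
        "temperature": round(rng.uniform(18.0, 30.0), 2),
        "timestamp": int(time.time()),
    }


def build_send_request(namespace, event_hub, token, event):
    """Prepare (but do not send) a POST of one JSON event to the event hub."""
    url = f"https://{namespace}.servicebus.windows.net/{event_hub}/messages"
    return urllib.request.Request(
        url,
        data=json.dumps(event).encode("utf-8"),
        headers={
            "Authorization": token,
            "Content-Type": "application/atom+xml;type=entry;charset=utf-8",
        },
        method="POST",
    )


rng = random.Random(42)
token = make_sas_token(
    "https://contoso-ns.servicebus.windows.net/telemetry",
    "send-policy",      # hypothetical policy with the Send right
    "not-a-real-key",   # placeholder key
)
request = build_send_request("contoso-ns", "telemetry", token, sample_event(rng))
print(request.full_url)
# Calling urllib.request.urlopen(request) would actually send the event.
```

Nothing is sent until `urlopen` is called, so the sketch is safe to run as-is. For anything beyond a quick test, the official Event Hubs client library is probably the better choice.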

Step 6 - Finishing up

With the data ready, we can go to Schema and watch the schema being built for our event hub:

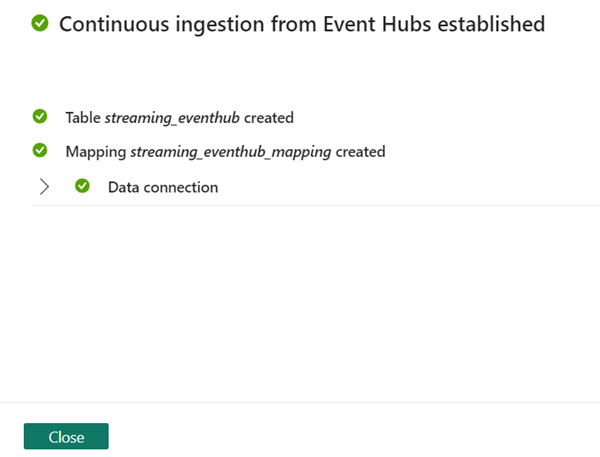

Make sure to select the correct data format here. During testing, the default format was TXT even though the data was JSON. Go to Summary and you should see green check marks everywhere.
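
If you are unsure which format your events actually use, a quick local check on a few sample payloads can help before you pick one in the wizard. This is a minimal sketch, not something Fabric does for you; the sample payloads are made up and the function name is hypothetical.

```python
import json


def looks_like_json(payloads):
    """Return True when every sample payload parses as a JSON object."""
    for raw in payloads:
        try:
            parsed = json.loads(raw)
        except ValueError:  # json.JSONDecodeError is a subclass of ValueError
            return False
        if not isinstance(parsed, dict):
            return False
    # An empty sample proves nothing, so treat it as "not JSON".
    return len(payloads) > 0


samples = [
    '{"deviceId": "device-1", "temperature": 21.5}',
    '{"deviceId": "device-2", "temperature": 19.0}',
]
print("JSON" if looks_like_json(samples) else "TXT")
```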

After that, you are done! You should see your Event Hub data filling up your KQL database. You can easily query the table by selecting “…” -> “Query Table” -> “Show Any 100 Records” or “Records ingested in the last 24 hours”.
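
For reference, the two menu entries correspond roughly to KQL queries like the ones built below. This is an approximation: the exact text Fabric generates may differ, `ingestion_time()` only returns values when the table's ingestion-time policy is enabled, and the table name `Telemetry` is hypothetical.

```python
def any_100_records(table):
    """KQL that returns an arbitrary sample of 100 rows from the table."""
    return f"['{table}']\n| take 100"


def ingested_last_24h(table):
    """KQL that keeps only rows ingested during the last 24 hours."""
    return f"['{table}']\n| where ingestion_time() > ago(24h)"


print(any_100_records("Telemetry"))
print(ingested_last_24h("Telemetry"))
```

You can paste the printed queries into the query editor of the KQL database to try them.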

Conclusion

Although this setup works for streaming data from your event hub to a KQL database, it offers little visibility into the source and the target destination.

Another problem is that there is no easy way to configure multiple sources or targets. As we will see in my next blog post, there are also more options for ingesting Event Hubs data, into a Lakehouse for example. To be able to do all that and more, keep an eye out for my next blog post: “Using Eventstreams to feed your Event Hub data to a Microsoft Fabric KQL database or Lakehouse”.